How Drones Finally Learned to Follow Directions: The VLFly Revolution

Hey there, fellow tech enthusiast! Welcome to our TechBit series by VECROS.

Imagine you’re at a party, and someone says, “Hey drone, go grab that backpack over there by the funky lamp.” In the old days, your drone might just spin in circles like a confused Roomba on caffeine, LOL! But not anymore! Enter VLFly (Vision-Language Fly), a slick new framework that lets drones understand human chit-chat and zip through the world like pros. This gem comes from a fresh arXiv paper that’s basically yelling, “Drones + AI = Magic!”

We’re talking about making drones navigate using just words, a camera, and some smart AI tricks. No fancy GPS or laser eyes needed, just pure brainpower from language models and vision tech. Buckle up, because we’re diving into this like a drone into a wind tunnel. We will break it down with fun analogies to understand it better.

The Drone Drama: Why Navigation is a Hot Mess!!!

Picture this: Drones are awesome for spying on your neighbor’s BBQ or delivering pizza (in theory, for now). But throw in real-world chaos (furniture, trees, that random cat) and suddenly path planning turns into a nightmare. Traditional methods? They’re like following a treasure map drawn by a toddler: rigid, unable to handle surprises, and hopeless at understanding “go to the red backpack” if it’s not pre-programmed.


Enter Vision-Language Navigation (VLN) for UAVs. It’s all about blending words (language instructions) with what the drone sees (vision) to plot a path. But UAVs have it tough: they’re flying in 3D space, dealing with wind, and their “eyes”, whether a single camera or several (like in our ATHERA), give funky perspectives compared to ground robots. Past systems relied on discrete actions like “turn left 90 degrees,” which is about as flexible as a brick.

VLFly flips the script with continuous velocity commands, open-vocabulary understanding (it gets words it was never explicitly trained on), and zero-shot generalization (it works in new places without re-learning). It’s like teaching your drone English overnight!

The ‘Eureka’ Moment: What Makes VLFly Tick

So, how does this wizardry work? VLFly is built like a three-layer cake: tasty, layered, and oh-so-satisfying. It brings out the big AI guns, Large Language Models (LLMs) and Vision-Language Models (VLMs), to turn chit-chat into flight paths.

-

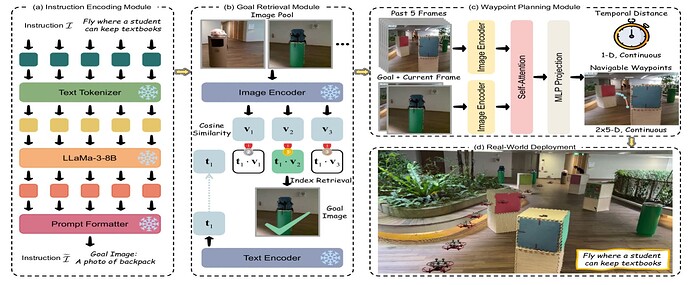

Instruction Encoding Module: This is the translator buddy. It takes your sloppy command like “Fly to the backpack near the window” and uses an LLM (think LLaMA) to spit out a clean prompt: “Goal Image: a photo of backpack.” Why? Because drones don’t speak human; they need structured vibes to match words to pics.

- Goal Retrieval Module: Now the fun part: matching that prompt to what the drone sees. Using CLIP (a VLM), it compares the text prompt to a bunch of pre-snapped images from the environment. It’s like playing “hot or cold” in a shared dream space: text and images live in the same embedding space, and cosine similarity scores pick the closest match. Boom, now the drone knows exactly what “backpack” looks like in real life.

- Waypoint Planning Module: The action hero. It stacks up recent camera views (egocentric observations) and the goal image, runs them through a Transformer (fancy neural net), and predicts waypoints, basically “go here next” points. Then a PID controller (a simple feedback loop) turns those into smooth velocity commands: speed up, turn left a bit, don’t crash. No discrete steps; it’s fluid like a bird dodging raindrops.
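To make the Goal Retrieval step less hand-wavy, here is a minimal sketch of picking a goal image by cosine similarity. Big caveat: the tiny 3-number embeddings, the file names, and the helper names `cosine_similarity` and `retrieve_goal` are all made up for illustration. In the real system the embeddings would come from CLIP's text and image encoders, and this is not the authors' code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as "no match"
    return dot / (norm_a * norm_b)


def retrieve_goal(text_embedding, image_pool):
    """Return (name, score) of the pool image closest to the text prompt.

    image_pool maps an image name to its embedding (hypothetical layout).
    """
    best_name, best_score = None, -2.0  # cosine is always >= -1
    for name, embedding in image_pool.items():
        score = cosine_similarity(text_embedding, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score


# Toy stand-ins for CLIP embeddings (assumed values, not real model output).
text_embedding = [0.9, 0.1, 0.0]  # "Goal Image: a photo of backpack"
image_pool = {
    "backpack.jpg": [0.8, 0.2, 0.1],
    "lamp.jpg": [0.1, 0.9, 0.2],
    "chair.jpg": [0.0, 0.2, 0.9],
}

best_name, best_score = retrieve_goal(text_embedding, image_pool)
print(best_name, round(best_score, 3))
```

With real CLIP vectors the loop stays the same; only the numbers get longer.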

The whole thing runs in real time on just a monocular camera. No maps, no ranging sensors, pure AI inference. The authors frame it as a POMDP (Partially Observable Markov Decision Process), which sounds scary but is just “the world is half-hidden, make smart guesses.”
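Curious how a predicted waypoint becomes a smooth velocity command? Here is a rough sketch of the PID idea from the Waypoint Planning Module. Everything specific in it is an assumption on our side: the gains, the speed limits, the body-frame convention (x forward, y left), and the helper names `PID` and `waypoint_to_velocity`. The paper's actual controller may be set up differently.

```python
import math
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID loop; the gains are hypothetical, not from the paper."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0
    first_step: bool = True

    def step(self, error, dt):
        self.integral += error * dt
        # No derivative on the first call: there is no previous error yet.
        derivative = 0.0 if self.first_step else (error - self.prev_error) / dt
        self.prev_error = error
        self.first_step = False
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def clamp(value, limit):
    """Keep value inside [-limit, limit]."""
    return max(-limit, min(limit, value))


def waypoint_to_velocity(waypoint, speed_pid, yaw_pid, dt,
                         max_speed=1.0, max_yaw_rate=1.0):
    """Map a body-frame waypoint (x forward, y left, metres) to
    (forward speed in m/s, yaw rate in rad/s). Limits are assumed values."""
    x, y = waypoint
    distance = math.hypot(x, y)       # how far away the waypoint is
    heading_error = math.atan2(y, x)  # how far we need to turn
    forward = clamp(speed_pid.step(distance, dt), max_speed)
    yaw_rate = clamp(yaw_pid.step(heading_error, dt), max_yaw_rate)
    return forward, yaw_rate


speed_pid = PID(kp=0.5, ki=0.0, kd=0.1)
yaw_pid = PID(kp=1.0, ki=0.0, kd=0.1)

# Waypoint one metre ahead and one metre to the left.
forward, yaw_rate = waypoint_to_velocity((1.0, 1.0), speed_pid, yaw_pid, dt=0.1)
print(forward, yaw_rate)
```

The point is the shape of the loop: the farther the waypoint, the faster the drone goes (up to a cap), and the more off-axis it is, the harder the drone turns. That is what “continuous velocity commands” buys you over “turn left 90 degrees.”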

My Favorite Bit: Open-Vocabulary Magic and Real-World Wins

What blows my mind? The open-vocabulary goal understanding. Drones aren’t limited to “apple” or “car”; they handle “funky lamp” or “that weird sculpture” because the VLM gets semantics from tons of internet data. In tests, VLFly nailed 83% success on direct instructions and 70% on indirect ones (hints instead of specifics) in real rooms with cubes and clutter.

Simulations? It crushed baselines like Seq2Seq and PPO (the reinforcement learning champ). Real world? Even outdoors with changing light, it was still flying high at 70-80% success. Trajectories show elegant obstacle dodges, like a pro skier!

Limitations (because nothing’s perfect, not even drones)

Okay, reality check: VLFly isn’t invincible. It needs a pre-collected image pool for goals, though a small one, like 100 pics. Abstract instructions can trip it up if they’re too vague: “go to happiness” won’t fly (pun intended). Outdoor dynamics like wind aren’t fully tested, and long flights might drift without extra sensors. Still, compared to clunky old methods, it’s a leap. Future tweaks? Add wind compensation or language-to-action chaining.

Wild Speculations: Drones That Chat Back?

Imagine drones not just following orders but asking clarifying questions: “Which backpack, the red one?” Or integrating with bigger AI for swarm navigation: a fleet mapping a forest on voice commands. With agentic AI rising, VLFly could be the wingman for search-and-rescue or delivery bots that actually understand “avoid the dog.”

This paper’s a teaser for AI-robotics mashups. If we scale VLMs more, drones might predict paths like chess masters, anticipating obstacles before they appear!

TL;DR

| Aspect | Old-School Navigation | VLFly Awesomeness |
|---|---|---|
| Input | Fixed commands, maps | Natural language, camera only |
| Actions | Discrete turns | Continuous velocity (smooth operator) |
| Generalization | Meh, needs retraining | Zero-shot to new spots |
| Success Rate | 40-60% in tests | 70-83% real-world wins |
| Cool Factor | Basic path planning | Open-vocab, like mind-reading |

[1] Reference: arXiv link. Go check it out!

If this sparked your curiosity, do comment!
