Drones Level Up: When Agentic AI Turns UAVs into Flying Brainiacs!

Hey there, fellow tech enthusiast! Welcome to our TechBit series by VECROS.

Over the past decade, drones have evolved from remote-controlled devices to increasingly autonomous tools. At first, autonomy meant little more than pre-programmed waypoint navigation or obstacle avoidance. But the next step in this trajectory is what researchers are calling agentic AI: systems that give drones the ability to reason, plan, and adapt in ways that go beyond rigid programming. A recent survey paper, “UAVs Meet Agentic AI: A Multidomain Survey of Autonomous Aerial Agents” (Sapkota et al., 2025), provides a systematic overview of this shift.

The paper[1] focuses on how agentic AI is transforming unmanned aerial vehicles (UAVs) into systems that can perceive, decide, and act with greater flexibility. The implications are broad: from agriculture and disaster response to logistics and environmental monitoring.

In this blog, I want to unpack the core ideas from the paper, drawing out the conceptual upgrades that agentic AI provides. I’ll contrast traditional UAV architectures with agentic ones, examine the technical layers involved, survey applications across domains, and reflect on challenges and future directions. The goal is not just to summarize, but to highlight how these developments fit into broader trends in AI autonomy, much like how reinforcement learning has evolved from game-playing to real-world control.

The Existential Upgrade: From Dumb Drones to AI Overlords

Traditional UAVs operate much like scripted automatons: they follow predefined paths, react to immediate sensor inputs, and rely heavily on human oversight. If something unexpected happens, such as a sudden gust of wind or shifting debris, they often fail or require intervention. Agentic AI, by contrast, endows UAVs with a form of agency: the ability to perceive the environment, reason about goals, control actions dynamically, and communicate with other agents. In a disaster zone, a traditional drone might map a fixed grid, potentially missing critical areas. An agentic UAV, however, could prioritize regions based on inferred survivor locations, reroute around obstacles in real time, and coordinate with ground robots, adapting to uncertainty as a human responder might.

The Beauty Under the Hood: How Agentic AI Works Its Magic

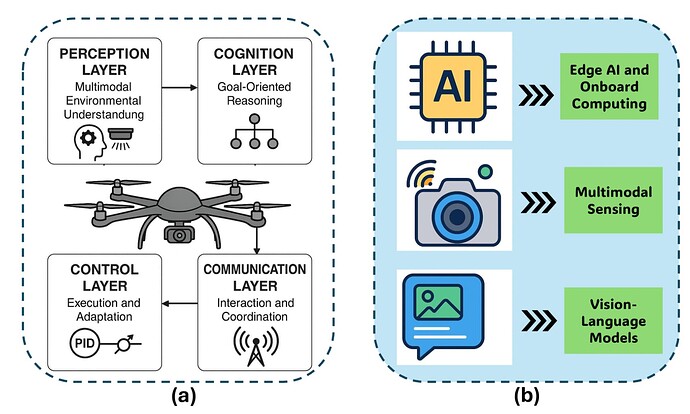

The paper frames this evolution through four interconnected layers:

- Perception: Integrating multimodal sensors (e.g., RGB cameras, LiDAR, thermal imaging) via AI models like convolutional neural networks (CNNs) or transformers to construct a coherent world model.
- Cognition: Employing reinforcement learning (RL) for decision-making under uncertainty, or vision-language models (VLMs) to interpret natural language commands like “map the flooded river area.”
- Control: Using techniques such as model predictive control (MPC) to execute plans, optimizing trajectories while accounting for dynamics like energy constraints or environmental disturbances.
- Communication: Leveraging vehicle-to-everything (V2X) protocols for swarm coordination, allowing UAVs to share maps or delegate tasks.

These layers form a hierarchical architecture, where higher-level cognition informs lower-level actions, enabling robustness in unstructured settings.
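To make the layering a bit more concrete, here is a toy sketch of one perceive, decide, act, communicate cycle on a grid. Everything in it is an assumption for illustration: the `WorldModel` class, the function names, and the greedy "move toward the goal" rule are mine, not the paper's. A real stack would put CNNs or transformers behind `perceive`, an RL policy or VLM behind `decide`, MPC behind `act`, and a V2X link behind `broadcast`.

```python
from dataclasses import dataclass, field


@dataclass
class WorldModel:
    """Hypothetical, heavily simplified world model: a grid position plus known obstacles."""
    position: tuple
    obstacles: set = field(default_factory=set)


def perceive(model, sensor_hits):
    """Perception layer: fold new obstacle detections into the world model."""
    model.obstacles |= set(sensor_hits)
    return model


def decide(model, goal):
    """Cognition layer: pick the free neighbouring cell closest to the goal (Manhattan distance)."""
    x, y = model.position
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    free = [c for c in candidates if c not in model.obstacles]
    if not free:
        return model.position  # boxed in: hold position
    return min(free, key=lambda c: abs(c[0] - goal[0]) + abs(c[1] - goal[1]))


def act(model, target):
    """Control layer: execute the move (a real system would track a trajectory here)."""
    model.position = target
    return model


def broadcast(model):
    """Communication layer: build the message a swarm peer would receive."""
    return {"position": model.position, "obstacles": sorted(model.obstacles)}


def step(model, sensor_hits, goal):
    """One full cycle through the four layers, top to bottom."""
    model = perceive(model, sensor_hits)
    target = decide(model, goal)
    model = act(model, target)
    return model, broadcast(model)


if __name__ == "__main__":
    uav = WorldModel(position=(0, 0))
    uav, message = step(uav, sensor_hits=[(1, 0)], goal=(3, 3))
    print(uav.position, message)
```

The point of the sketch is only the data flow: the decision in `decide` depends on what `perceive` has written into the shared model, and the message in `broadcast` reflects the state after `act` has run.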

Key Technical Advances in Core Tasks

The paper delves into how agentic AI enhances three fundamental UAV tasks: mapping, navigation, and path planning.

- Mapping: Traditional approaches rely on static grids, but agentic systems use AI-boosted simultaneous localization and mapping (SLAM) to fuse sensor data into dynamic 3D models. This handles moving obstacles or changing lighting, much like how a navigator updates a mental map during exploration.
- Navigation: VLMs bridge language and vision, grounding commands in the environment. A drone instructed to “avoid the red barn” can interpret and detour accordingly, reducing the need for precise coordinates.
- Path Planning: RL excels here, treating the environment as a Markov decision process where the agent learns optimal policies through simulated trials. Rewards encourage efficient, safe routes; penalties deter collisions. Compared to classical methods like A* search, which assume perfect knowledge, RL adapts to partial observability, anticipating “moves” from nature, such as wind or wildlife.

In cluttered spaces, RL can reduce energy consumption by 20-30% by optimizing for multiple objectives simultaneously.
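Here is a minimal sketch of the "path planning as a Markov decision process" idea, using plain tabular Q-learning on a 4x4 grid. To be clear about the assumptions: the grid, the reward values (-1 per step as a stand-in for energy cost, -10 for bumping into an obstacle, +10 at the goal), and the hyperparameters are all made up for illustration, and tabular Q-learning is just the simplest RL method I could pick. It is not the specific approach from the survey.

```python
import random

SIZE = 4
START, GOAL = (0, 0), (3, 3)
OBSTACLES = {(1, 1), (2, 1)}
ACTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def env_step(state, action):
    """Apply one move; return (next_state, reward, done)."""
    nxt = (state[0] + action[0], state[1] + action[1])
    if not (0 <= nxt[0] < SIZE and 0 <= nxt[1] < SIZE):
        return state, -1.0, False   # left the grid: stay put, pay the step cost
    if nxt in OBSTACLES:
        return state, -10.0, False  # collision penalty, drone stays in place
    if nxt == GOAL:
        return nxt, 10.0, True      # goal reward ends the episode
    return nxt, -1.0, False         # ordinary step cost (energy proxy)


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a Q-table with epsilon-greedy exploration."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    q = {((x, y), a): 0.0 for x in range(SIZE) for y in range(SIZE) for a in range(n)}
    for _ in range(episodes):
        state = START
        for _ in range(50):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(n)
            else:
                a = max(range(n), key=lambda i: q[(state, i)])
            nxt, reward, done = env_step(state, ACTIONS[a])
            best_next = 0.0 if done else max(q[(nxt, i)] for i in range(n))
            # Standard Q-learning update toward reward + discounted best next value
            q[(state, a)] += alpha * (reward + gamma * best_next - q[(state, a)])
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned policy greedily from START."""
    state, path = START, [START]
    for _ in range(max_steps):
        a = max(range(len(ACTIONS)), key=lambda i: q[(state, i)])
        state, _, done = env_step(state, ACTIONS[a])
        path.append(state)
        if done:
            break
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

Note what the agent never receives: a map. Unlike A*, it only sees rewards and penalties from trial runs, which is the property that lets RL cope when the environment is not fully known in advance.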

Real-World Wins: Drones Conquering Domains

The survey covers seven domains where agentic UAVs demonstrate clear advantages:

- Precision Agriculture: AI analyzes normalized difference vegetation index (NDVI) from multispectral imagery to detect crop stress, planning targeted spraying paths. This minimizes waste and boosts yields, extending human oversight in vast fields.
- Disaster Response: RL prioritizes search areas, SLAM builds real-time maps amid rubble, and swarms coordinate coverage, enabling faster monitoring of wildfires or floods than manual methods.
- Environmental Monitoring: Thermal cameras track biodiversity over large areas, with RL optimizing trajectories to maximize efficiency. Applications include poacher detection, which helps protect ecosystems.
- Other Domains: Logistics benefits from swarm deliveries; infrastructure inspections use AI for damage detection; security patrols navigate threats autonomously.

Empirical findings suggest agentic AI increases flexibility by 2-3x, cuts human intervention by up to 80%, and supports beyond visual line of sight (BVLOS) operations safely.
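Since NDVI came up under precision agriculture, here is how it is computed: NDVI = (NIR - Red) / (NIR + Red), using near-infrared and red reflectance. The sketch below flags grid cells whose NDVI falls under a threshold. The 0.4 cutoff and the `stressed_cells` helper are my own illustrative assumptions; real thresholds depend on crop, season, and sensor.

```python
def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); returns 0.0 when both bands are zero."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def stressed_cells(nir_band, red_band, threshold=0.4):
    """Return (row, col) of every cell whose NDVI is below the (assumed) threshold."""
    cells = []
    for r, (nir_row, red_row) in enumerate(zip(nir_band, red_band)):
        for c, (nir, red) in enumerate(zip(nir_row, red_row)):
            if ndvi(nir, red) < threshold:
                cells.append((r, c))
    return cells


if __name__ == "__main__":
    # Toy 2x2 reflectance grids (made-up values)
    nir_band = [[0.8, 0.5], [0.6, 0.3]]
    red_band = [[0.1, 0.4], [0.1, 0.3]]
    print(stressed_cells(nir_band, red_band))
```

The flagged cells are the ones a spraying path would need to visit, which is where the planning side of the stack takes over.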

TL;DR

| Aspect | Traditional UAVs | Agentic AI UAVs |
|---|---|---|
| Mapping | Static grids | Dynamic 3D SLAM with fusion |
| Navigation | GPS-dependent | VLM-guided, context-aware |
| Path Planning | Rigid algorithms | RL-adaptive optimization |
| Autonomy Level | Operator-reliant | Goal-oriented, adaptive |

The Not-So-Funny Parts: Challenges and Limitations

Agentic AI is not without flaws. Hardware constraints such as limited battery life and onboard compute hinder long missions, though edge AI mitigates some issues. AI models can suffer from opacity (black-box decisions), hallucinations in VLMs, or poor generalization to novel environments. Regulatory hurdles for BVLOS add friction, as do ethical concerns like privacy in surveillance or access inequities for smaller operators.

The paper proposes mitigations: federated learning for privacy, hybrid hardware designs for endurance, and explainable AI to demystify decisions. Out-of-distribution failures, e.g., mistaking a squirrel for a landmark, highlight the need for robust training.
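For a feel of why federated learning helps with privacy, here is a tiny sketch of federated averaging (FedAvg): each drone trains locally and shares only its model parameters, and a coordinator combines them weighted by how much data each drone saw. The function name and the flat-list representation of parameters are assumptions for illustration. The paper names federated learning as a mitigation but I am not claiming this is the exact scheme it has in mind.

```python
def federated_average(client_weights, client_sizes):
    """Weighted mean of per-client parameter vectors (weights ~ local sample counts)."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


if __name__ == "__main__":
    # Two hypothetical drones: one trained on 1 sample, one on 3
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

The raw imagery never leaves the drone in this setup; only the parameter vectors travel, which is the privacy argument in a nutshell.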

Future Vibes: Self-Evolving Drone Utopias?

Looking ahead, the paper envisions lifelong learning, where UAVs refine models over time, and integrated ecosystems with ground agents and IoT. Energy-aware planning could enhance sustainability, while accessible interfaces (e.g., voice commands) promote equity. In a decade, UAVs might negotiate airspace dynamically or adapt routes based on real-time data feeds. This survey provides a foundation for these advancements, bridging theory and practice.

For those interested, check out the reference [1].

Peace out, and may your drones always fly high. If this sparked your curiosity, do comment!


[1] Sapkota et al. (2025), “UAVs Meet Agentic AI: A Multidomain Survey of Autonomous Aerial Agents”: https://arxiv.org/html/2506.08045v1
